Deep learning, which makes use of various types of artificial neural networks, is the paradigm that has the potential to grow AI at an exponential rate. There is a lot of hype around neural nets, and people are busy researching them and working to realize their full potential.

The title of this post might seem obscure to some readers, but consider an example: humans evolved from ape-like ancestors, and along the way we lost information, knowledge, or particular experiences that were no longer useful or needed. More generally, humans have the extraordinary ability to keep updating their memories with the most important knowledge while overwriting information that is no longer useful. The world is full of trivial experiences and irrelevant knowledge that does little to help us, so we forget those things or simply ignore them.

The same cannot be said of machines. When a neural network learns a new skill, what it learned before is quickly overwritten, regardless of how important it was; this problem is known as catastrophic forgetting. There is currently no reliable mechanism these networks can use to prioritize skills, deciding what to remember and what to forget. That may be changing, thanks to Rahaf Aljundi and colleagues at the University of Leuven in Belgium and at Facebook AI Research, who have shown that the approach biological systems use to learn and to forget can work with artificial neural networks too.

The key is a process known as Hebbian learning, first proposed in the 1940s by the Canadian psychologist Donald Hebb to explain how brains learn via synaptic plasticity. Hebb’s theory is famously summarized as “Cells that fire together wire together.”

What is Hebbian Learning?

Hebbian learning is one of the oldest learning algorithms and is based in large part on the dynamics of biological systems. A synapse between two neurons is strengthened when the neurons on either side of it (input and output) have highly correlated activity. In essence, if an input neuron's firing frequently leads to the firing of the output neuron, the synapse between them is strengthened. By analogy, in an artificial network the (tap) weight connecting two successive neurons is increased when their activations are highly correlated.
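The rule described above is often written as Δw_ij = η · y_i · x_j, where x_j is the input activity, y_i the output activity, and η a learning rate. Below is a minimal pure-Python sketch of that plain Hebbian update for a single layer; the function name, the learning rate, and the toy inputs are illustrative choices, not taken from the paper. Note that this plain rule only ever grows the weights of co-active units; practical variants add decay or normalization (for example, Oja's rule) to keep them bounded.

```python
def hebbian_update(weights, x, y, lr=0.1):
    """One plain Hebbian step (illustrative sketch): w_ij += lr * y_i * x_j.

    weights[i][j] is the connection from input neuron j to output neuron i.
    """
    return [
        [w_ij + lr * y_i * x_j for w_ij, x_j in zip(row, x)]
        for row, y_i in zip(weights, y)
    ]


# Input neuron 0 repeatedly fires together with the output neuron; input 1 stays silent.
w = [[0.0, 0.0]]
for _ in range(5):
    w = hebbian_update(w, x=[1.0, 0.0], y=[1.0])
# w[0][0] has grown to about 0.5, while w[0][1] is still 0.0.
```

Only the connection whose two ends fired together was strengthened, which is the "fire together, wire together" behaviour in its simplest form.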

Artificial neural nets being taught what to forget

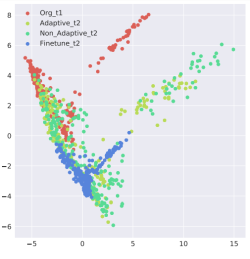

In other words, the connections between neurons grow stronger when the neurons fire together, and these connections therefore become more difficult to break. This is how we learn: repeated synchronized firing of neurons makes the connections between them stronger and harder to overwrite. Aljundi's team applied this idea to artificial networks by measuring the outputs of a neural network and monitoring how sensitive they are to changes in the connections (parameters) within the network. This gave them a sense of which parameters are most important and should therefore be preserved. “When learning a new task, changes to important parameters are penalized,” said the team. They say the resulting network has “memory aware synapses.”
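To make the idea concrete, here is a minimal sketch for a single linear layer F(x) = Wx. It assumes the importance measure as we understand it from the paper: the average absolute gradient of the squared L2 norm of the network's output with respect to each parameter, computed on unlabeled inputs, followed by a penalty λ Σ Ω_ij (w_ij − w*_ij)² added to the new task's loss, where w* are the weights after the old task. The function names, toy inputs, and λ are hypothetical, and a real implementation would use an autodiff framework on a deep network rather than this hand-derived gradient. For a linear layer that gradient works out to 2 · y_i · x_j, so the importance is itself a Hebbian-style co-activation statistic, which echoes the link to Hebb described above.

```python
def forward(weights, x):
    """Single linear layer (toy model): y_i = sum_j w_ij * x_j."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in weights]


def mas_importance(weights, inputs):
    """Omega_ij = mean over inputs of |d ||F(x)||^2 / d w_ij| (sketch).

    For F(x) = W x the gradient is 2 * y_i * x_j, so no labels are needed.
    """
    omega = [[0.0] * len(row) for row in weights]
    for x in inputs:
        y = forward(weights, x)
        for i, y_i in enumerate(y):
            for j, x_j in enumerate(x):
                omega[i][j] += abs(2.0 * y_i * x_j) / len(inputs)
    return omega


def mas_penalty(weights, old_weights, omega, lam=1.0):
    """lam * sum_ij omega_ij * (w_ij - w*_ij)^2, to be added to the new task's loss."""
    return lam * sum(
        o * (w - w_old) ** 2
        for o_row, w_row, old_row in zip(omega, weights, old_weights)
        for o, w, w_old in zip(o_row, w_row, old_row)
    )


# Weights after the first task (hypothetical toy values).
w_star = [[1.0, 0.0]]
omega = mas_importance(w_star, inputs=[[1.0, 0.0], [1.0, 1.0]])
# omega == [[2.0, 1.0]]: weight 0 affected the output for both inputs, weight 1 for only one.

# Moving the important weight costs more than moving the less important one by the same amount.
cost_important = mas_penalty([[0.5, 0.0]], w_star, omega)  # 2.0 * 0.25 = 0.5
cost_minor = mas_penalty([[1.0, 0.5]], w_star, omega)      # 1.0 * 0.25 = 0.25
```

During training on a new task, adding this penalty to the loss pulls gradient descent back toward w* most strongly along the weights with high Ω, while leaving the unimportant weights free to change.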

In the team's tests, neural networks with memory aware synapses turn out to perform better than the other networks they were compared with. In other words, they preserve more of the original skill than networks without this ability, although the results certainly leave room for improvement.

The paper: Memory Aware Synapses: Learning What (Not) To Forget, arxiv.org/abs/1711.09601

(via: MIT Technology Review, Wikipedia, arXiv)
