Quantum computers, how they work, and the steady stream of discoveries in quantum computing are attracting a great deal of hype right now.

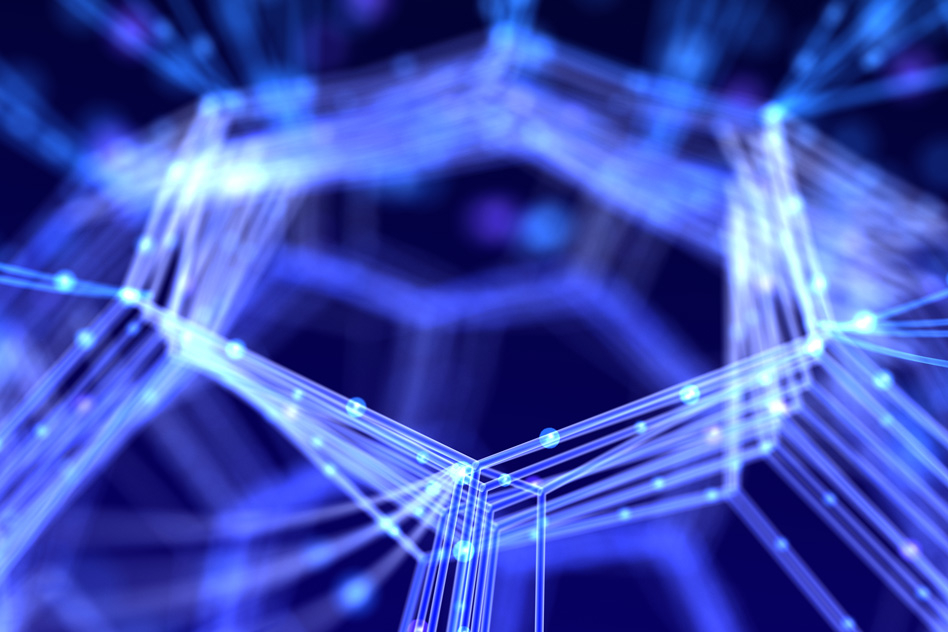

Recently, the quantum network, or rather the quantum internet, has been in the news. So what is a quantum network? Quantum networks form an important element of quantum computing and quantum communication systems. In general, quantum networks allow for the transmission of quantum information (quantum bits, also called qubits) between physically separated quantum processors. A quantum processor is a small quantum computer able to perform quantum logic gates on a certain number of qubits.
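To make "qubit" and "quantum logic gate" a little more concrete, here is a minimal sketch in plain Python. It assumes the usual textbook picture: a qubit is a pair of complex amplitudes, and a gate is a 2x2 matrix applied to that pair. The names `apply_gate` and `probabilities` are illustrative helpers, not part of any quantum library.

```python
import math

# A single-qubit state: complex amplitudes for |0> and |1>.
ZERO = (1 + 0j, 0 + 0j)

# The Hadamard gate, a common quantum logic gate, as a 2x2 matrix.
S = 1 / math.sqrt(2)
H = ((S, S),
     (S, -S))

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a two-component state vector."""
    a, b = state
    return (gate[0][0] * a + gate[0][1] * b,
            gate[1][0] * a + gate[1][1] * b)

def probabilities(state):
    """Born rule: each outcome's probability is |amplitude| squared."""
    return tuple(abs(x) ** 2 for x in state)

# Applying H to |0> gives an equal superposition of |0> and |1>.
plus = apply_gate(H, ZERO)
print(probabilities(plus))  # both entries are approximately 0.5
```

A real quantum processor manipulates physical systems rather than lists of numbers, but this is the arithmetic that a gate operation corresponds to.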

Being able to send qubits from one quantum processor to another allows them to be connected to form a quantum computing cluster. This is often referred to as networked quantum computing or distributed quantum computing. Here, several less powerful quantum processors are connected together by a quantum network to form one much more powerful quantum computer. This is analogous to connecting several classical computers to form a computer cluster in classical computing. Networked quantum computing offers a path towards scalability for quantum computers since more and more quantum processors can naturally be added over time to increase the overall quantum computing capabilities. In networked quantum computing, the individual quantum processors are typically separated only by short distances.

In August 2016, Chinese physicists launched the world’s first quantum satellite. Unlike the dishes that deliver your television shows, this 1,400-pound behemoth doesn’t beam radio waves. Instead, the physicists designed it to send and receive bits of information encoded in delicate photons of infrared light. It’s a test of a budding technology known as quantum communications, which experts say could be far more secure than any existing system for relaying information. If quantum communications were like mailing a letter, entangled photons would be something like the envelope: they carry the message and keep it secure. Jian-Wei Pan of the University of Science and Technology of China, who leads the research on the satellite, has said that he wants to launch more quantum satellites in the next five years.

The basic structure of a quantum network and more generally a quantum internet is analogous to classical networks. First, we have end nodes on which applications can ultimately be run. These end nodes are quantum processors of at least one qubit. Some applications of a quantum internet require quantum processors of several qubits as well as a quantum memory at the end nodes.

Second, to transport qubits from one node to another, we need communication lines. For the purpose of quantum communication, standard telecom fibers can be used. For networked quantum computing, in which quantum processors are linked at short distances, one typically employs different wavelengths depending on the exact hardware platform of the quantum processor.

Third, to make maximum use of communication infrastructure, one requires optical switches capable of delivering qubits to the intended quantum processor. These switches need to preserve quantum coherence, which makes them more challenging to realize than standard optical switches.

Finally, to transport qubits over long distances, one requires a quantum repeater. Since qubits cannot be copied (a result known as the no-cloning theorem), classical signal amplification is not possible, so a quantum repeater works in a fundamentally different way from a classical repeater.
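The claim that qubits cannot be copied can be illustrated with a small plain-Python sketch. It assumes the standard textbook model of two-qubit states as four amplitudes and uses a CNOT gate as a naive "copier"; the helper names (`tensor`, `cnot`, `try_to_copy`) are illustrative only. The CNOT duplicates the basis states |0> and |1> correctly, but fails on a superposition, which is the kind of state an amplifier would also need to copy.

```python
import math

def tensor(q1, q2):
    """Joint state of two qubits, amplitudes ordered |00>, |01>, |10>, |11>."""
    return [a * b for a in q1 for b in q2]

def cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    s = list(state)
    s[2], s[3] = s[3], s[2]
    return s

def close(u, v, tol=1e-9):
    """True if two amplitude lists agree entry by entry."""
    return all(abs(x - y) < tol for x, y in zip(u, v))

ZERO = [1.0, 0.0]
ONE = [0.0, 1.0]
PLUS = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # equal superposition

def try_to_copy(qubit):
    """Attempt to copy `qubit` onto a blank |0> qubit using a CNOT."""
    return cnot(tensor(qubit, ZERO))

# A basis state is duplicated: |1>|0> becomes |1>|1>.
copies_basis = close(try_to_copy(ONE), tensor(ONE, ONE))
# A superposition is not: the result is an entangled pair, not two copies.
copies_plus = close(try_to_copy(PLUS), tensor(PLUS, PLUS))
print(copies_basis, copies_plus)  # True False
```

This is only a demonstration for one candidate copier, not a proof; the no-cloning theorem says that no quantum operation succeeds for all input states. It is why repeaters cannot simply read and re-send the signal the way classical amplifiers do.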

The quantum internet could also be useful for potential quantum computing schemes, says Fu. Companies like Google and IBM are developing quantum computers to execute specific algorithms faster than any existing computer. Instead of selling people personal quantum computers, they’ve proposed putting their quantum computers in the cloud, where users would log in to them via the internet. While running their computations, users might want to transmit quantum-encrypted information between their personal computer and the cloud-based quantum computer. “Users might not want to send their information classically, where it could be eavesdropped,” Fu says. Still, this technology is almost 13 years away, and we will surely witness many more discoveries in the coming years.

(Sources: MIT Technology Review, Wikipedia, Wired)