Processors are at the heart of the digital age. The device you are reading this blog on, the smartwatch you check the time on: every one of them has a processor. These processors run the processes needed to show you a notification, run an application, play games or check your email. Because they run every essential process on your computer, these silicon chips handle extremely sensitive data, including passwords and encryption keys, the fundamental tools for keeping your computer secure.

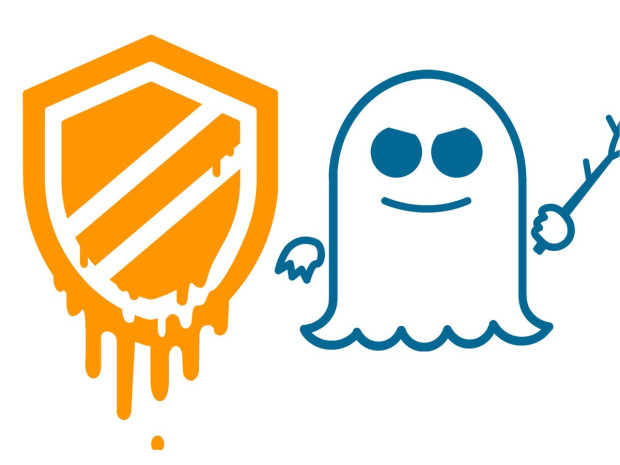

The Spectre and Meltdown vulnerabilities, disclosed publicly in January 2018, could let attackers capture information they shouldn’t be able to access, such as your passwords and keys. That is why an attack on the chip itself is such a serious security concern.

Meltdown and Spectre

So what’s Spectre?

To make programs run faster, a modern chip guesses which way a branch in the code will go and starts executing the likely path before it knows for sure. This is called speculative execution. If the guess turns out to be wrong, the results are thrown away, but the discarded work can still leave traces, for example in the processor’s cache, that an attacker can measure. Spectre attacks exploit this by inducing a victim to speculatively perform operations that would not occur during correct program execution and that leak the victim’s confidential information via a side channel to the adversary. In brief, Spectre is a vulnerability in implementations of branch prediction that affects modern microprocessors with speculative execution: it tricks a program into accessing arbitrary locations in its own memory space.
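To make the bounds-check-bypass idea concrete, here is a minimal Python sketch. It is a toy simulation, not attack code: the `ToyCPU` class, its one-bit branch predictor, the `victim_function` name and the set standing in for the cache are all hypothetical, and no real hardware, memory or timing is involved. It shows a wrong guess being squashed architecturally (the call returns `None`) while the simulated cache still records which line the secret byte selected.

```python
# Toy model only: a hypothetical CPU with a 1-bit branch predictor and a "cache"
# that speculative work can fill. Nothing here touches real hardware.
class ToyCPU:
    def __init__(self, memory):
        self.memory = memory          # flat list standing in for the victim's address space
        self.cache = set()            # probe lines currently "cached" (indexed by byte value)
        self.predict_taken = False    # 1-bit predictor for the single bounds check

    def flush_probe_array(self):
        # The attacker evicts every probe line before the attack call.
        self.cache.clear()

    def victim_function(self, x, array1_size):
        # Speculation: follow the predicted path before the comparison resolves.
        if self.predict_taken:
            secret = self.memory[x]   # may read past the end of array1
            self.cache.add(secret)    # dependent access picks a probe line by value
        # The real bounds check resolves; a wrong guess is squashed...
        in_bounds = x < array1_size
        self.predict_taken = in_bounds  # ...but predictor and cache keep their state
        if in_bounds:
            value = self.memory[x]
            self.cache.add(value)
            return value
        return None                   # architecturally, nothing was read


ARRAY1_SIZE = 16
memory = list(range(ARRAY1_SIZE)) + [0] * 48   # array1 followed by other data
memory[40] = 42                                 # hypothetical secret byte
cpu = ToyCPU(memory)

for x in range(ARRAY1_SIZE):                    # 1) train the predictor with in-bounds calls
    cpu.victim_function(x, ARRAY1_SIZE)
cpu.flush_probe_array()                         # 2) flush the probe lines
result = cpu.victim_function(40, ARRAY1_SIZE)   # 3) out-of-bounds call is mispredicted
leaked = next(iter(cpu.cache))                  # 4) only the secret's line is cached
print(result, leaked)  # None 42
```

In a real attack the final step is a timing measurement rather than a peek at a set; the simulation simply exposes the cache state directly.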

The Spectre paper builds up the attack in four steps:

- First, it shows that branch prediction logic in modern processors can be trained to reliably hit or miss based on the internal workings of a malicious program.

- Second, it shows that the resulting difference between cache hits and misses can be reliably timed, so that what should be a harmless, non-functional difference can be turned into a covert channel that extracts information from an unrelated process’s inner workings.

- Third, the paper combines these results with return-oriented programming and other principles, demonstrating them with a simple example program and a JavaScript snippet running in a sandboxed browser. In both cases, the entire address space of the victim process (i.e. the contents of a running program) is shown to be readable simply by exploiting speculative execution of conditional branches in code generated by a stock compiler or by the JavaScript engine of an existing browser.

- Finally, the paper generalizes the attack to any non-functional state of the victim process, briefly discussing even highly non-obvious effects such as bus arbitration latency.
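The timing step (turning cache hits and misses into a covert channel) can be sketched in the same toy style. The Python below assumes a hypothetical simulated timer in which cached probe lines take 30 to 60 "cycles" and uncached ones 150 to 300; these numbers and the threshold of 100 are illustrative, not measurements. The receiver times all 256 probe lines, counts those below the threshold as hits, and votes across several trials, roughly in the spirit of the Flush+Reload technique.

```python
import random

HIT_CYCLES = (30, 60)      # assumed latency range when a probe line is cached
MISS_CYCLES = (150, 300)   # assumed latency range when it must come from DRAM
THRESHOLD = 100            # assumed cutoff between a hit and a miss


def time_access(line, cached_lines, rng):
    """Simulated timer: latency for reading one probe line."""
    low, high = HIT_CYCLES if line in cached_lines else MISS_CYCLES
    return rng.randint(low, high)


def recover_byte(cached_lines, trials=5, seed=0):
    """Time all 256 probe lines per trial; return the line that is fast most often.

    Each trial assumes the attacker has re-flushed the probe array and the victim
    has run again, so `cached_lines` is the same every time.
    """
    rng = random.Random(seed)
    votes = [0] * 256
    for _ in range(trials):
        for line in range(256):
            if time_access(line, cached_lines, rng) < THRESHOLD:
                votes[line] += 1
    return max(range(256), key=lambda line: votes[line])


recovered = recover_byte(cached_lines={42})
print(recovered)  # 42
```

Because the simulated hit and miss ranges never overlap, one trial would already suffice here; voting only matters once timing noise makes the ranges overlap, as it does on real hardware. A real receiver must also re-flush the probe lines before every trial and usually visits them in a scrambled order so the hardware prefetcher does not blur the signal; the simulation skips both.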

And what’s Meltdown?

In this form of attack, the chip is fooled into loading protected data during a speculation window in such a way that an unauthorized attacker can later recover it. The attack relies on a common, industry-wide practice of separating the loading of in-memory data from the check of whether the program is permitted to read it. The industry’s conventional wisdom assumed that the entire speculative execution process was invisible, so separating these steps wasn’t seen as a risk.

In Meltdown, a carefully crafted piece of code first arranges for some attack code to execute speculatively. This code loads protected data that the program doesn’t ordinarily have access to. Because this happens speculatively, the permission check on that access runs in parallel and does not fail until the end of the speculation window, so in the meantime the privileged data is loaded into a special internal chip memory known as the cache. Next, a carefully constructed code sequence performs further memory operations whose addresses depend on the value of the privileged data. The results of these operations are discarded when the speculation ends and never become normally visible, but a technique known as cache side-channel analysis can reveal which memory locations they touched, and from that, the value of the protected data.
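A similar toy model can illustrate this Meltdown sequence. In the hypothetical Python sketch below, `ToyOutOfOrderCore` forwards a loaded value to a dependent probe access before the deferred permission check raises `PageFault`. The exception discards the architectural result, but the probe line stays in the simulated cache. The class, the exception and the addresses are assumptions for illustration only; no real kernel memory or CPU is involved.

```python
class PageFault(Exception):
    """Raised when the deferred permission check finally retires."""


class ToyOutOfOrderCore:
    """Hypothetical core whose permission check lags behind the dependent access."""

    def __init__(self, memory, kernel_addresses):
        self.memory = memory                    # user and kernel data share one address space
        self.kernel_addresses = kernel_addresses
        self.cache = set()                      # probe lines touched, even transiently

    def load_and_probe(self, address):
        value = self.memory[address]            # load executes before the check completes
        self.cache.add(value)                   # dependent access picks a probe line by value
        if address in self.kernel_addresses:    # permission check resolves at retirement...
            raise PageFault(hex(address))       # ...and squashes the architectural result
        return value


memory = [0] * 256
memory[0x80] = 0x5A                             # hypothetical kernel secret
core = ToyOutOfOrderCore(memory, kernel_addresses={0x80})
try:
    core.load_and_probe(0x80)
except PageFault:
    pass                                        # attacker's process survives the fault
leaked = next(iter(core.cache))                 # only the secret's probe line is cached
print(hex(leaked))  # 0x5a
```

In the published attack the fault has to be handled or suppressed (for example with a signal handler) so the attacker’s process survives to run the timing step; the `try`/`except` plays that role here.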

The basic difference between Spectre and Meltdown is that Spectre can be used to manipulate a process into revealing its own data, whereas Meltdown can be used to read privileged memory mapped into a process’s address space that even the process itself would normally be unable to access (on some unprotected operating systems, this includes data belonging to the kernel or other processes).

(via Wikipedia, CNET, spectreattack.com, meltdownattack.com, Red Hat, Wired)