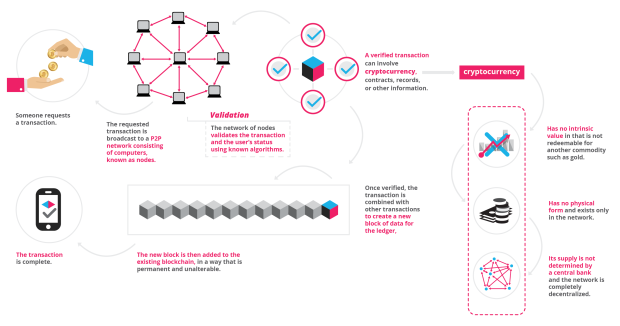

With all the hype around blockchain, and the platforms built on it, like Bitcoin and Ethereum, all over the media and magazines, I had to write a blog on Ethereum. Briefly, blockchain is to Bitcoin what the internet is to email: a big electronic system on top of which you can build applications.

Then what’s Ethereum? It is a public database that keeps a permanent record of digital transactions. Importantly, this database doesn’t require any central authority to maintain and secure it.

Like Bitcoin, Ethereum is a distributed public blockchain network. Although there are some significant technical differences between the two, the most important distinction to note is that Bitcoin and Ethereum differ substantially in purpose and capability. Bitcoin offers one particular application of blockchain technology: a peer-to-peer electronic cash system that enables online Bitcoin payments. While the Bitcoin blockchain is used to track ownership of digital currency (bitcoins), the Ethereum blockchain focuses on running the programming code of any decentralized application.

In the Ethereum blockchain, instead of mining for bitcoin, miners work to earn Ether, a type of crypto token that fuels the network. Beyond being a tradeable cryptocurrency, Ether is also used by application developers to pay for transaction fees and services on the Ethereum network.

The Ethereum blockchain is essentially a transaction-based state machine: given a series of inputs (transactions), it transitions from one state to the next. The first state in Ethereum is the genesis state, the blank state before any transactions have happened on the network. Each transaction moves the network to a new state, and the latest of these is the current state of Ethereum. Because a very large number of transactions are submitted at the same time, they are grouped into blocks. So, to simplify, a block contains a series of transactions.
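To make the state-machine idea concrete, here is a minimal Python sketch under simplifying assumptions: the world state is just a map from hypothetical account names to balances, a transaction only moves value, and a block is an ordered list of transactions applied in sequence. Real Ethereum state also tracks nonces, contract code, and storage, and the genesis allocation below is invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical, simplified world state: account name -> balance.
State = dict[str, int]


@dataclass(frozen=True)
class Transaction:
    sender: str
    recipient: str
    value: int


def apply_transaction(state: State, tx: Transaction) -> State:
    """State transition function: return a new state, leaving `state` untouched."""
    if tx.value <= 0 or state.get(tx.sender, 0) < tx.value:
        raise ValueError(f"invalid transaction: {tx}")
    new_state = dict(state)
    new_state[tx.sender] -= tx.value
    new_state[tx.recipient] = new_state.get(tx.recipient, 0) + tx.value
    return new_state


def apply_block(state: State, block: list[Transaction]) -> State:
    """A block is an ordered list of transactions, applied one after another."""
    for tx in block:
        state = apply_transaction(state, tx)
    return state


# Genesis: no transactions yet, only an invented initial allocation.
genesis: State = {"alice": 100, "bob": 0}
block_1 = [Transaction("alice", "bob", 30), Transaction("bob", "carol", 10)]
current = apply_block(genesis, block_1)
print(current)  # {'alice': 70, 'bob': 20, 'carol': 10}
```

Returning a fresh state instead of mutating the old one mirrors the idea that each block produces a new state while earlier states remain well defined.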

For a transaction to cause a transition from one state to the next, it must be valid. Valid transactions are confirmed through a process known as mining, in which a group of nodes (that is, computers) expend their compute resources to create a block of valid transactions. In the most basic sense, a transaction is a cryptographically signed instruction that is generated by an externally owned account, serialized, and then submitted to the blockchain.

Any node on the network that declares itself as a miner can attempt to create and validate a block. Lots of miners from around the world try to create and validate blocks at the same time. Each miner provides a mathematical “proof” when submitting a block to the blockchain, and this proof acts as a guarantee: if the proof exists, the block must be valid.

For a block to be added to the main blockchain, the miner must produce this proof faster than any competing miner. The process of validating each block by having a miner provide a mathematical proof is known as “proof of work.”
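The core asymmetry of proof of work, hard to find but cheap to check, can be sketched in a few lines. This is a simplified stand-in, not Ethereum's actual algorithm (Ethereum's proof of work used Ethash): it hashes a hypothetical block header with SHA-256 and treats a hash that starts with a given number of zero hex digits as a valid proof.

```python
import hashlib
import json


def block_hash(header: dict, nonce: int) -> str:
    """Hash a (hypothetical) block header together with a candidate nonce."""
    payload = json.dumps({**header, "nonce": nonce}, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def mine(header: dict, difficulty: int) -> tuple[int, str]:
    """Try nonces until the hash starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = block_hash(header, nonce)
        if digest.startswith(target):
            return nonce, digest
        nonce += 1


def verify(header: dict, nonce: int, difficulty: int) -> bool:
    """Checking a proof costs one hash, however long finding it took."""
    return block_hash(header, nonce).startswith("0" * difficulty)


header = {"parent": "00" * 32, "transactions": ["alice->bob:30"], "miner": "m1"}
nonce, digest = mine(header, difficulty=4)
print(nonce, digest)
print(verify(header, nonce, difficulty=4))  # True
```

Raising `difficulty` by one multiplies the expected number of attempts by 16, while `verify` still needs only a single hash. That gap is what lets every node cheaply check a block that took the miner real work to produce.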

A miner who validates a new block is rewarded with a certain amount of value for doing this work. What is that value? The Ethereum blockchain uses an intrinsic digital token called ether, which is listed under the code ETH and traded on cryptocurrency exchanges. Ether is also used to pay for transaction fees and computational services on the Ethereum network. Every time a miner proves a block, new ether is generated and awarded to that miner. Ether can be transferred between accounts and used to compensate participant nodes for computations performed.

EVM (Ethereum Virtual Machine)

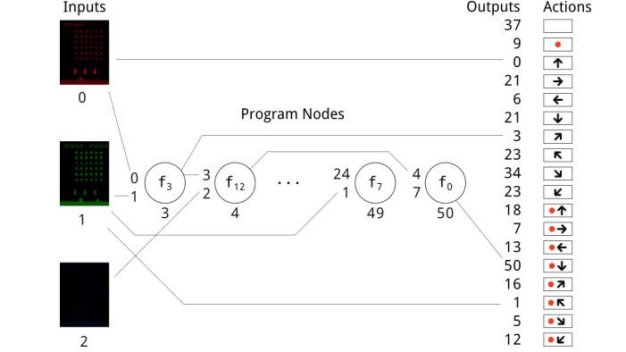

Ethereum is a programmable blockchain. Rather than give users a set of pre-defined operations (e.g. bitcoin transactions), Ethereum allows users to create their own operations of any complexity they wish. In this way, it serves as a platform for many different types of decentralized blockchain applications, including but not limited to cryptocurrencies.

Ethereum in the narrow sense refers to a suite of protocols that define a platform for decentralized applications. At the heart of it is the Ethereum Virtual Machine (“EVM”), which can execute code of arbitrary algorithmic complexity. In computer science terms, Ethereum is “Turing complete”, although in practice every computation step costs gas, which bounds how long any program can run. Developers can create applications that run on the EVM using friendly programming languages modeled on existing languages, such as Solidity, whose syntax resembles JavaScript, and Vyper, whose syntax resembles Python.
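The EVM is a stack-based machine that meters every instruction in gas. The toy interpreter below is a sketch of that idea only: the opcode names borrow EVM mnemonics and arithmetic wraps at 256 bits as in the EVM, but the tiny instruction set, the tuple encoding of instructions, and the gas figures in `GAS_COST` are simplified, illustrative assumptions rather than the real specification.

```python
# Illustrative gas table for a toy stack machine (not the full EVM schedule).
GAS_COST = {"PUSH": 3, "ADD": 3, "MUL": 5}
WORD = 2**256  # EVM words are 256-bit; arithmetic wraps modulo 2**256


class OutOfGas(Exception):
    pass


def run(program: list[tuple], gas_limit: int) -> tuple[list[int], int]:
    """Execute (opcode, *args) instructions; return the final stack and gas used."""
    stack: list[int] = []
    gas_used = 0
    for op, *args in program:
        cost = GAS_COST[op]
        # Charge gas before executing; stop as soon as the budget is exceeded.
        if gas_used + cost > gas_limit:
            raise OutOfGas(f"{op} needs {cost} gas, only {gas_limit - gas_used} left")
        gas_used += cost
        if op == "PUSH":
            stack.append(args[0] % WORD)
        elif op == "ADD":
            stack.append((stack.pop() + stack.pop()) % WORD)
        elif op == "MUL":
            stack.append((stack.pop() * stack.pop()) % WORD)
    return stack, gas_used


# Computes (2 + 3) * 4 on the stack.
program = [("PUSH", 2), ("PUSH", 3), ("ADD",), ("PUSH", 4), ("MUL",)]
print(run(program, gas_limit=100))  # ([20], 17)
```

Because every step is charged against a gas limit, a program that never terminates simply runs out of gas. This is how Ethereum can offer Turing-complete execution without letting a single contract stall the network.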

Smart Contracts

Smart contracts are deterministic exchange mechanisms, controlled by code, that can transfer value directly between agents who do not trust each other. They can be used to facilitate, verify, and enforce the negotiation or performance of agreements with economic consequences, and can potentially circumvent censorship, collusion, and counterparty risk. In Ethereum, smart contracts are treated as autonomous scripts, or stateful decentralized applications, that are stored on the Ethereum blockchain for later execution by the EVM. Executing the instructions in a contract costs gas, which is paid for in ether, and contracts can be written in a variety of Turing-complete languages.
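As a rough illustration of a contract as code plus persistent state, the sketch below models a hypothetical token contract in Python. The `call` helper, the flat per-method gas costs in `GAS_PER_CALL`, and the 1 gwei gas price are assumptions for illustration. Real contracts are compiled to EVM bytecode (for example from Solidity) and are charged for each instruction they actually execute.

```python
from dataclasses import dataclass, field


class Revert(Exception):
    """Raised when a call breaks the contract's rules; storage is left unchanged."""


@dataclass
class TokenContract:
    # Persistent contract storage: the owner and each account's token balance.
    owner: str
    balances: dict[str, int] = field(default_factory=dict)

    def mint(self, caller: str, to: str, amount: int) -> None:
        if caller != self.owner:
            raise Revert("only the owner can mint")
        self.balances[to] = self.balances.get(to, 0) + amount

    def transfer(self, caller: str, to: str, amount: int) -> None:
        if amount <= 0 or self.balances.get(caller, 0) < amount:
            raise Revert("insufficient token balance")
        self.balances[caller] -= amount
        self.balances[to] = self.balances.get(to, 0) + amount


# Hypothetical flat gas cost per method; the caller pays gas * gas_price in wei.
GAS_PER_CALL = {"mint": 50_000, "transfer": 30_000}


def call(contract, ether: dict[str, int], caller: str, method: str, *args, gas_price: int) -> None:
    fee = GAS_PER_CALL[method] * gas_price
    if ether.get(caller, 0) < fee:
        raise Revert("cannot pay for gas")
    ether[caller] -= fee  # the fee is kept even if the call below reverts
    getattr(contract, method)(caller, *args)


ether = {"alice": 10**18, "bob": 10**18}  # 1 ether each, in wei
token = TokenContract(owner="alice")
call(token, ether, "alice", "mint", "alice", 100, gas_price=10**9)
call(token, ether, "alice", "transfer", "bob", 40, gas_price=10**9)
print(token.balances)  # {'alice': 60, 'bob': 40}
print(ether["alice"])  # 999920000000000000

try:
    call(token, ether, "bob", "mint", "bob", 1_000, gas_price=10**9)
except Revert as err:
    print("reverted:", err)  # reverted: only the owner can mint
```

Bob's failed `mint` leaves the token balances untouched but still costs him the gas fee, echoing how Ethereum charges for computation even when a contract call reverts.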

There’s still a lot more to Ethereum, but this blog should give you some insight before you delve deeper into the utopian world of blockchain and cryptocurrency.

(sources: Wiki, Telegraph, Medium, ethdocs)